Run LLMs Locally with Ease

Introducing Ollama 0.1.32

Are you interested in experimenting with large language models (LLMs) on your own machine? Look no further than Ollama! Ollama is a lightweight and extensible framework that allows you to run powerful LLMs locally, giving you more control and flexibility over your LLM interactions.

The latest release, Ollama 0.1.32, brings exciting news: support for the mighty llama3! This update lets you leverage the capabilities of Meta's Llama 3, an LLM that comes in 8-billion and 70-billion parameter sizes and is capable of impressive feats in text generation, translation, and more.
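Parameter count translates fairly directly into download size and memory use. As a rough back-of-the-envelope sketch, the weights take about (number of parameters) × (bits stored per weight) / 8 bytes. The 4-bit figure below is an assumption (4-bit quantized weights are a common choice for running models locally), and real model files differ somewhat because of metadata and layers kept at higher precision.

```python
def estimate_weight_size_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate size of a model's weights in gigabytes (1 GB = 10**9 bytes)."""
    bytes_total = num_params * bits_per_weight / 8  # 8 bits per byte
    return bytes_total / 1e9


# Llama 3 sizes, rounded. 4 bits/weight is an assumed quantization level;
# 16 bits/weight corresponds to unquantized fp16/bf16 weights.
for label, params in [("Llama 3 8B", 8e9), ("Llama 3 70B", 70e9)]:
    q4 = estimate_weight_size_gb(params, 4)
    fp16 = estimate_weight_size_gb(params, 16)
    print(f"{label}: ~{q4:.0f} GB at 4-bit, ~{fp16:.0f} GB at 16-bit")
```

This prints roughly 4 GB versus 16 GB for the 8B model and 35 GB versus 140 GB for the 70B model, which is why quantization matters so much on a typical laptop.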

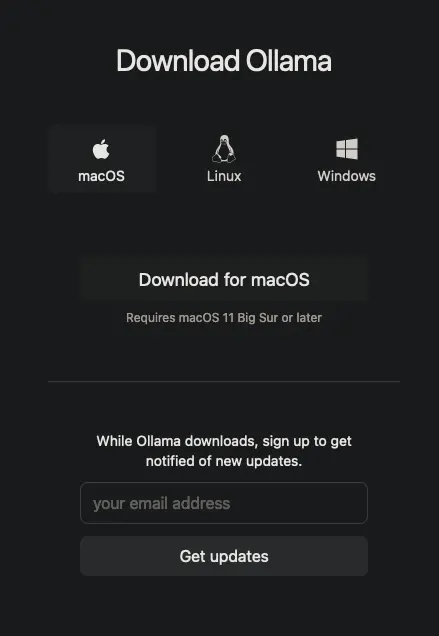

Downloading Ollama to your computer

This is very straightforward: just go to the Ollama website (ollama.com) and download the app for your operating system.
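Once the app is installed, the `ollama` command-line tool should be available in your terminal. A minimal sketch to confirm this from Python uses only the standard library's `shutil.which` to look the executable up on your PATH; the helper name is hypothetical, and it does not check whether the Ollama server is actually running.

```python
import shutil


def ollama_cli_path():
    """Return the full path of the `ollama` executable, or None if it is not on PATH."""
    return shutil.which("ollama")


if __name__ == "__main__":
    path = ollama_cli_path()
    if path is None:
        print("ollama not found on PATH; install the app or try opening a new terminal")
    else:
        print(f"ollama found at {path}")
```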

Downloading llama3 with Ollama

To get started with llama3 in Ollama, run the following command:

```bash
ollama pull llama3
```

This command instructs Ollama to download the llama3 model. By default, `llama3` pulls the 8-billion-parameter (8B) variant; the larger 70B variant is available as `llama3:70b`. More parameters generally mean a more capable model, but also more disk space and memory.
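Ollama model references follow a `name:tag` pattern, and when you leave the tag off, Ollama uses `latest`, so `ollama pull llama3` is the same as `ollama pull llama3:latest`. The hypothetical helper below illustrates that defaulting rule. It is only a sketch for plain library names such as `llama3` or `llama3:70b` and does not handle registry hosts or namespaces (references containing `/` or a port number).

```python
def split_model_ref(ref: str) -> tuple[str, str]:
    """Split an Ollama-style 'name:tag' reference, defaulting the tag to 'latest'.

    Hypothetical helper for illustration; only handles plain names like 'llama3'.
    """
    name, sep, tag = ref.partition(":")
    return name, (tag if sep and tag else "latest")


print(split_model_ref("llama3"))      # ('llama3', 'latest')
print(split_model_ref("llama3:70b"))  # ('llama3', '70b')
```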

Note: Downloading large models like llama3 can take some time depending on your internet connection speed.
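When the download finishes, `ollama list` shows the models available locally. To check the same thing from Python, a minimal sketch can query the REST API that the Ollama app serves, assuming the default address `http://localhost:11434` and its `/api/tags` endpoint, which returns a JSON object whose `models` array lists the pulled models. The helper names are hypothetical; `list_local_models` returns `None` when the server cannot be reached (for example, when the Ollama app is not running).

```python
import json
import urllib.request
from typing import Optional


def parse_model_names(payload: str) -> list:
    """Extract model names (e.g. 'llama3:latest') from an /api/tags JSON body."""
    return [model["name"] for model in json.loads(payload).get("models", [])]


def list_local_models(base_url: str = "http://localhost:11434") -> Optional[list]:
    """Return the names of locally pulled models, or None if Ollama is unreachable."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/tags", timeout=2) as response:
            return parse_model_names(response.read().decode("utf-8"))
    except (OSError, ValueError):  # connection errors, timeouts, malformed JSON
        return None


if __name__ == "__main__":
    models = list_local_models()
    if models is None:
        print("Could not reach Ollama; is the app running?")
    elif any(name.split(":")[0] == "llama3" for name in models):
        print("llama3 is ready:", [n for n in models if n.startswith("llama3")])
    else:
        print("llama3 not found; run: ollama pull llama3")
```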

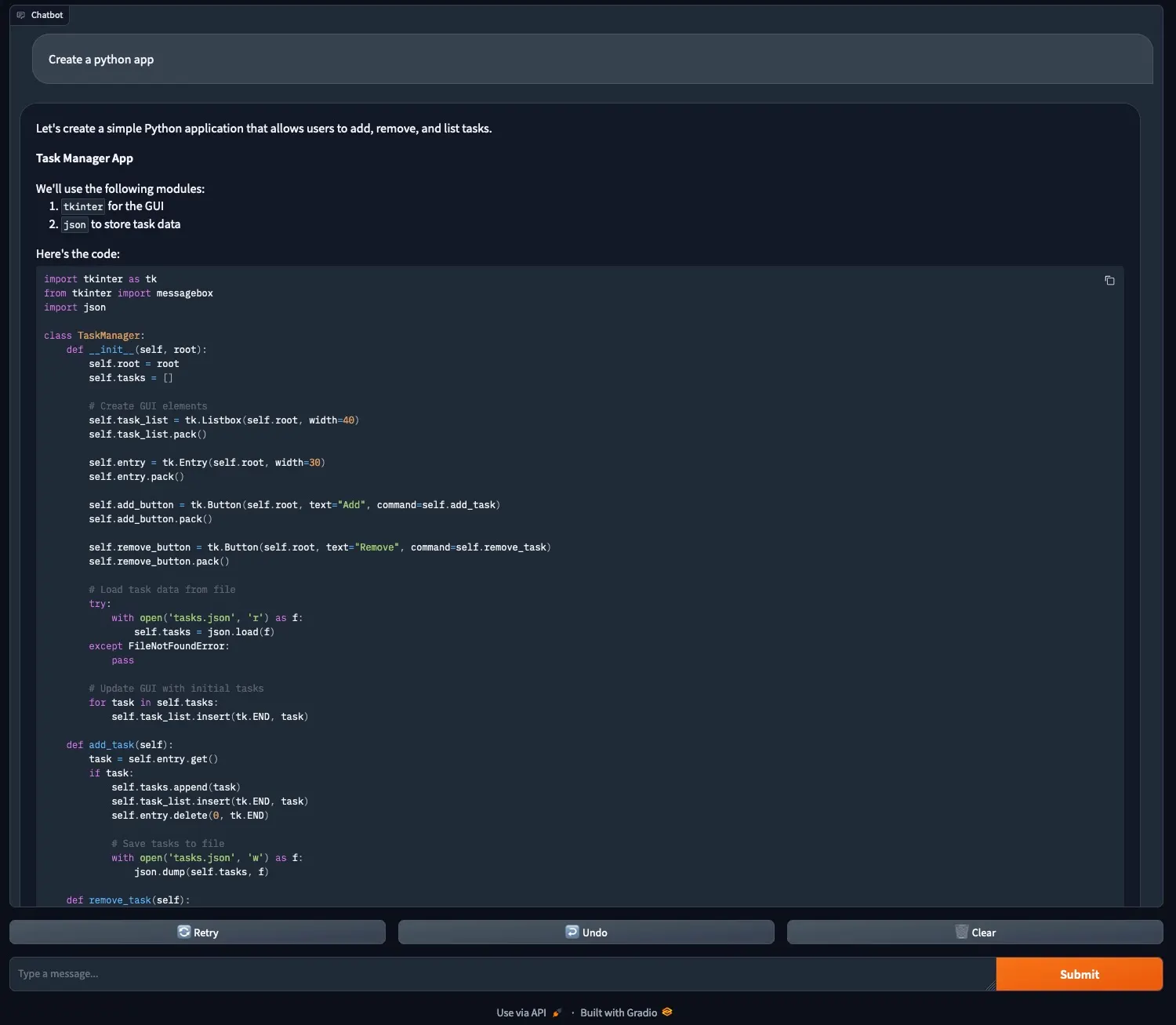

Chat with llama3 using Gradio

Now that you have llama3 downloaded, let's create a simple chatbot interface using the Gradio library. Gradio allows you to build user interfaces for machine learning models with minimal code.

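Here's how to set up a Gradio environment and interact with llama3. First, create and activate a virtual environment:

```bash
python -m venv gradio-env
source gradio-env/bin/activate  # activate the virtual environment (on Windows: gradio-env\Scripts\activate)
```

Next, install Gradio and the Ollama Python library:

```bash
pip install gradio
pip install ollama
```

Then create a Python script (main.py):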

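```python
import ollama
import gradio as gr


def predict_code(message, history, request: gr.Request):
    # Expert language comes from the page URL, e.g. http://localhost:7860/?code=rust
    # (falls back to Python when no ?code= parameter is given)
    programming_language = request.request.query_params.get('code', 'Python').upper()

    # Put the system prompt first so the model reads its instructions before the chat
    history_openai_format = [
        {
            "role": "system",
            "content": f'YOU ARE AN EXPERT IN {programming_language}. '
                       'REPLY WITH DETAILED ANSWERS AND PROVIDE CODE EXAMPLES, PLEASE!'
        }
    ]

    # Replay earlier turns; assumes Gradio's tuple-style history of (user, assistant) pairs
    for human, assistant in history:
        history_openai_format.append({"role": "user", "content": human})
        history_openai_format.append({"role": "assistant", "content": assistant})

    # Add the new user message last
    history_openai_format.append({"role": "user", "content": message})

    # Ask the local llama3 model for a streamed reply
    response = ollama.chat(
        model="llama3",
        messages=history_openai_format,
        stream=True
    )

    # Yield the growing reply so the chat window updates as text arrives
    partial_message = ""
    for chunk in response:
        if chunk['message']['content'] is not None:
            partial_message = partial_message + chunk['message']['content']
            yield partial_message


if __name__ == '__main__':
    # Serve the chat UI on all network interfaces, port 7860
    gr.ChatInterface(predict_code).launch(server_name="0.0.0.0", server_port=7860)
```

This script defines a chat function, `predict_code`, that turns the conversation into the role/content message list Ollama expects: a system prompt asking the model to act as an expert in the language named by the `code` URL query parameter (Python by default), the earlier turns from `history`, and the new user message. It sends this list to llama3 through `ollama.chat` with `stream=True` and yields the reply as it grows, so the answer appears piece by piece. `gr.ChatInterface` wraps the function in a ready-made chat UI, and `launch` serves it on port 7860 on all network interfaces (`0.0.0.0`).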
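To inspect the message list sent to llama3 without starting Gradio or Ollama, the prompt-building step can be pulled out into a plain function. This is a sketch with a hypothetical helper name, `build_messages`: it puts the system prompt first (the conventional position for chat models), then the earlier turns as user/assistant pairs, then the new message, assuming Gradio's tuple-style history.

```python
def build_messages(message, history, programming_language="Python"):
    """Build the role/content message list for an Ollama chat request.

    Hypothetical helper; `history` is assumed to be a list of
    (user_text, assistant_text) pairs, as in Gradio's tuple-style chat history.
    """
    messages = [{
        "role": "system",
        "content": f"YOU ARE AN EXPERT IN {programming_language.upper()}. "
                   "REPLY WITH DETAILED ANSWERS AND PROVIDE CODE EXAMPLES, PLEASE!",
    }]
    # Earlier turns, in order
    for human, assistant in history:
        messages.append({"role": "user", "content": human})
        messages.append({"role": "assistant", "content": assistant})
    # The new question goes last
    messages.append({"role": "user", "content": message})
    return messages


if __name__ == "__main__":
    history = [("What is a list comprehension?", "A compact way to build lists...")]
    for m in build_messages("Show me one with a filter.", history, "python"):
        print(m["role"], "->", m["content"][:40])
```

Keeping this step in a pure function also makes it easy to unit-test prompt changes before trying them against the model.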

Run the script:

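```bash
python main.py
```

This starts a local web server on port 7860. Open http://localhost:7860 in your web browser to type prompts and chat with llama3! To change the expert language, add a `code` query parameter, for example http://localhost:7860/?code=JavaScript. The Ollama app must be running in the background, because the `ollama` Python library sends its requests to the local Ollama server.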
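The reply appears gradually because `predict_code` is a generator: with `stream=True`, `ollama.chat` returns chunks whose `['message']['content']` holds the next piece of text, and each `yield` hands Gradio the full reply so far, which it redraws in the chat window. The sketch below reproduces that accumulation offline with hypothetical hard-coded chunks in the same dictionary shape, so it runs without an Ollama server.

```python
def accumulate_stream(chunks):
    """Yield the growing reply text, the same way predict_code does for Gradio."""
    partial_message = ""
    for chunk in chunks:
        content = chunk["message"]["content"]
        if content is not None:
            partial_message += content
            yield partial_message


# Hypothetical chunks shaped like ollama.chat(..., stream=True) output.
fake_chunks = [
    {"message": {"role": "assistant", "content": "Hello"}},
    {"message": {"role": "assistant", "content": ", world"}},
    {"message": {"role": "assistant", "content": "!"}},
]

for snapshot in accumulate_stream(fake_chunks):
    print(snapshot)
# Hello
# Hello, world
# Hello, world!
```

Yielding the whole reply each time (rather than only the new piece) matches how Gradio replaces the bot message on every update.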

Remember: This is a basic example. You can customize the Gradio interface further and integrate Ollama's functionalities to create more sophisticated LLM interactions.

By combining Ollama and Gradio, you can unlock the potential of local LLMs and explore the world of large language models on your own terms. So, dive in, experiment, and see what amazing things you can create!

Finally, here is the demo!